How will advances in digital technology and Artificial Intelligence affect the aviation industry? Can we harness their potential to improve operational efficiency and safety in the sector? How can we facilitate this from a regulatory perspective?

The rapid advancements in digital technology and Artificial Intelligence (AI) have captivated the media and entered the mainstream consciousness. From fabricated images of renowned personalities to chatbots capable of generating incredibly convincing fictional texts, we have witnessed the immense potential of these innovative technologies, as well as their associated pitfalls. These tools, such as ChatGPT, Bard, Stable Diffusion, Midjourney, and DALL-E, are now readily available to the global population, with increasing accessibility even to non-technical users. Consequently, it is conceivable that these advancements may extend beyond their current niche, offering numerous use cases across industries.

While the aviation industry has shown a keen interest in adopting these novel technologies, it is crucial to recognize the potential risks inherent in their unregulated and non-standardized implementation within critical processes. However, it is important not to succumb to alarmist notions that AI technology is spiralling out of control. Rather, we should understand these technologies as enablers, merely tools that can assist us. The true risk lies in the human dimension and our ability to interact with these technologies in a safe and sensible manner.

In fact, various studies have shed light on behavioural patterns in human-machine interactions that may give rise to risks. One such pattern is known as “automation bias”, the tendency to over-rely on automated systems, which can diminish vigilance as the level of automation increases. For instance, pilots relying on autopilot may exhibit reduced attentiveness. Furthermore, a lack of understanding of how these technologies reach their decisions, or an inclination to view machines as infallible, objective, and accurate, can cloud users' judgment and introduce risks when these technologies are adopted.

In light of this reality, policymakers face the challenge of determining how to regulate these emerging technologies and to what extent. Striking the right balance is essential to harness their potential for operational efficiency without compromising safety. Regulation should focus on promoting transparency, accountability, and a comprehensive understanding of these technologies. By establishing guidelines that ensure responsible and ethical use, policymakers can facilitate their integration into the aviation industry while safeguarding against potential risks.

To regulate AI or not to regulate, that is the question

Regulating new and emerging technologies, particularly AI, presents an unprecedented challenge. The complexity of regulating any new technology is well known, with recent examples such as unmanned aerial vehicles (UAVs) or remote towers serving as reminders. The integration of these technologies and their impact on safety and security require thorough analysis. However, AI regulation goes beyond these complexities: the ever-evolving nature of the technology makes it difficult to establish a stable legal definition and, with it, a framework that maximizes benefits while minimizing risks.

One of the primary obstacles in regulating AI is the lack of a precise definition. AI technologies are evolving continuously, which makes it difficult to create a legal framework that adequately addresses their many facets. This dynamic nature also poses challenges to regulatory processes, which are typically slow to adapt. By the time new laws are introduced, they may already be outdated, creating legal barriers to reaping the benefits of AI as well as loopholes that introduce risks. Anticipatory regulation of rapidly evolving technologies can also backfire: the information available when a regulation is drafted may not cover all potential outcomes, leading to unintended effects.

Given these limitations, the question arises: is it truly necessary to establish new AI-specific regulations? In reality, many potential issues stemming from AI-based systems may already fall under existing regulatory frameworks. For instance, operational safety concerns related to AI systems can be addressed through established Safety Management Systems (SMS) frameworks, which can account for the operational hazards introduced when AI-based systems are integrated into specific workflows. Similarly, potential applications such as fuel or operational management Use Cases could be regulated using the governance and safety frameworks already in place for non-AI-based software. Nonetheless, some specific dimensions will likely need dedicated regulation, such as the transparency, robustness, and ethics of AI developments, or the certification approach for non-deterministic technologies.

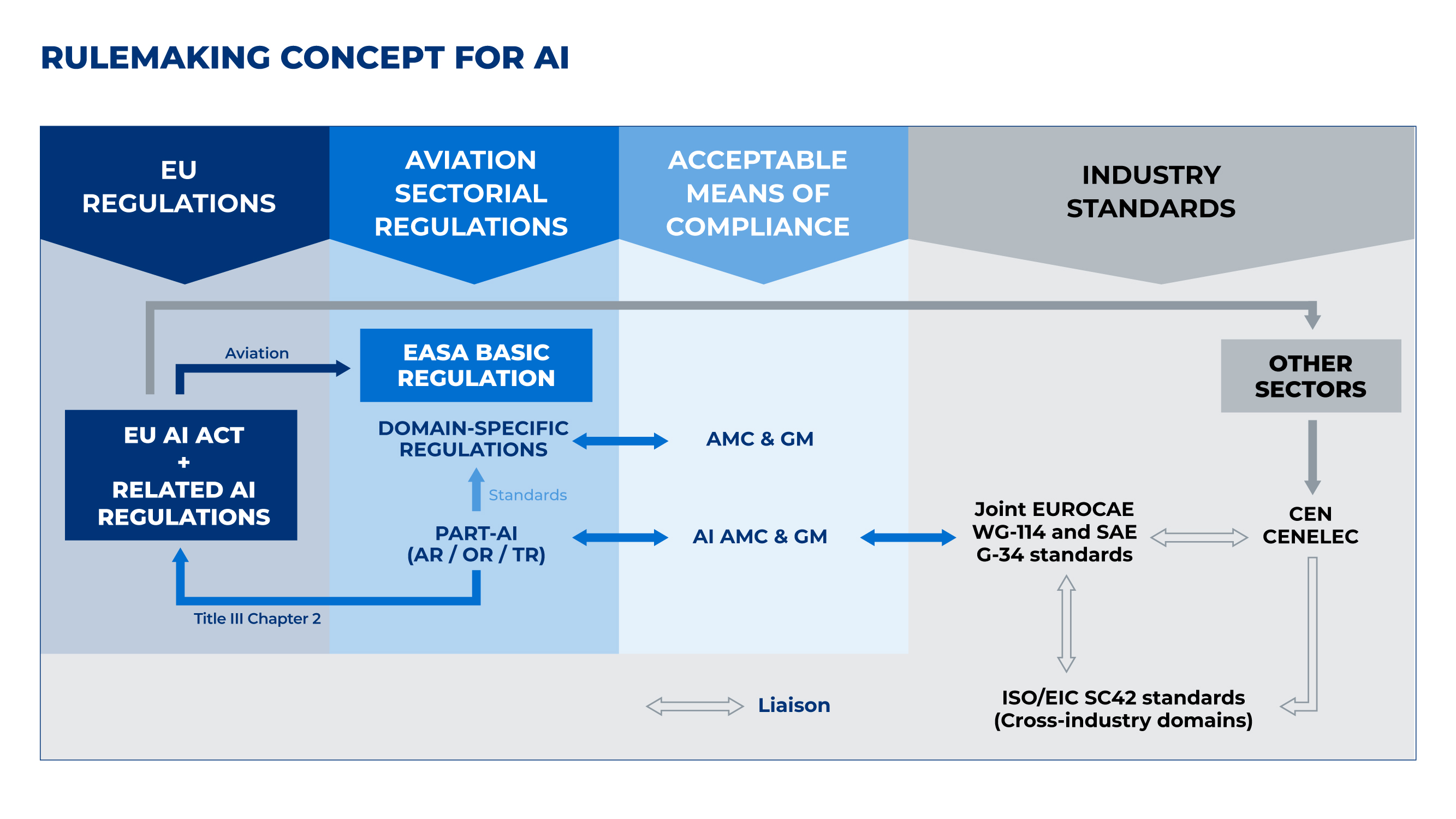

With all this, we come to the big question: what is the right balance between regulation and non-regulation, and how should it be articulated? If we start from the premise that non-regulation introduces risks such as those mentioned above and that traditional, “hard” regulation may not work, a potential approach to the problem is “soft law”: a framework of basic rules and standards under which new developments should be built. Such a control framework is flexible enough to adapt quickly to new technologies while still allowing a degree of control and supervision over developments. It has to be accompanied by a strengthening of the regulatory and oversight functions, in the form of training for the inspectors and experts of the Competent Authorities, so that the principles and guidelines established in the soft law are correctly adopted and effectively implemented. This is the line being pursued by Europe and EASA in regulatory matters.

The AI European framework and EASA’s approach to the aviation industry

In recent years, the European Union has been working on a new legal framework to establish standards that promote the responsible development and use of Artificial Intelligence (AI). The proposed legislation, known as the “Artificial Intelligence (AI) Act” and put forth by the European Commission in 2021, aims to assess AI-based developments according to their specific applications. It imposes requirements of varying stringency in terms of data quality, transparency, human oversight, and accountability, commensurate with the expected risk associated with each Use Case.

The AI Act incorporates a classification system that determines the level of risk an AI application poses to individuals' health, safety, and fundamental rights. It distinguishes between minimal risk AI systems, such as spam filters, and unacceptable risk systems, such as government social scoring or real-time biometric identification in public spaces. While this framework appears adaptable to future developments, it is already encountering its first challenge with text-generative AIs like ChatGPT. ChatGPT, for instance, lacks a specific use case and can be applied across a spectrum of risk levels, from unacceptable risk associated with generalized misinformation to minimal risk in facilitating operational processes like document drafting.

In the aviation industry, a supranational framework is being developed by the European Union Aviation Safety Agency (EASA) to ensure the implementation of AI regulations. This is reflected in the AI Roadmap 2.0, an updated version of the roadmap initially published in 2020. EASA has gained valuable insights into the use of AI in aviation, mainly through research initiatives, and these have contributed to the formulation of the new roadmap, which aims to facilitate the effective and safe integration of AI within the aviation domain.

EASA's AI Roadmap 2.0 addresses several key questions that had not been fully answered in previous iterations. These questions encompass establishing public confidence in AI-enabled aviation products, preparing for the certification and type approval of advanced automation, integrating the ethical dimension of AI (transparency, non-discrimination, fairness, etc.) into oversight processes, and identifying additional processes, methods, and standards required to unlock the potential of AI and enhance air transport safety.

This living document, the AI Roadmap 2.0, will undergo continuous revisions to keep pace with technological advancements. EASA recognizes that successful integration of AI in the aviation sector requires collaboration and cooperation from all stakeholders across the aviation value chain. It is only through this collective effort that the potential of AI can be harnessed, and the aviation industry can benefit from its advancements while ensuring safety and compliance.

DATAPP, research to anticipate digital regulatory needs in the sector

Beyond AI, digitalisation from a more generic perspective is reshaping the aviation business at a quick pace, bringing efficiency and wider opportunities to manage information. The deployment of digital solutions throughout the air transport industry is a fact and brings significant changes to the traditional working processes, business models, standards, and regulations.

With this, EASA faces the new challenge of determining which changes to safety standards and regulations are needed in response to the introduction of innovative solutions and processes. Anticipating what is to come in the industry in the field of data science applications is key to making sure safety levels are maintained without slowing innovation down. To anticipate such needs and help the regulator develop the enablers necessary to foster the adoption of such digital solutions, ALG is developing for EASA the project “DATAPP - Digital Transformation - Case Studies for Aviation Safety Standards - Data Science Applications”.

Under this project, ALG is investigating how digital solutions can be integrated into the new operational reality of aviation safety regulations for three strategic Use Cases. Funded by the European Union's Horizon Europe research and innovation program, this project will delve into the benefits, constraints, standardisation, and deployment issues surrounding this integration, emphasizing how standards should reflect the reality of options available.

Through three in-depth case studies, we will explore the use of flight training data, new methods and analytical techniques for fuel management, and data modelling to improve the use of flight data for safety. In doing so, we will be able to assess changes in aviation regulations, flight data collection and SMS, providing valuable insights into how digital solutions can improve safety management processes for aviation authorities, operators, and the industry.

As the aviation industry evolves, DATAPP is a step towards achieving the goal of integrating digital solutions into the new operational reality.

In the next posts, we will continue to report on the progress and insights of the project in each of the Use Cases. Let's continue to reflect together on how to advance the adoption of emerging digital technologies and the many opportunities that lie ahead.

To discuss the EASA DATAPP project or any digital challenge, please contact our digital team.