Safety reporting is one of the foundational outputs of the Risk Management System in aviation. Traditionally, its role was primarily reactive: analyze a report, understand what happened, and take action accordingly. However, the whole system, encouraged by ICAO and other responsible institutions, is shifting towards a data-driven safety culture that prioritizes prevention over response. As aviation safety management systems evolve from reactive to proactive approaches, the value that can be extracted from reports has also grown significantly.

Such a proactive approach relies on the systematic and continuous collection of safety information to identify and address potential hazards effectively. In the European Union, Regulation (EU) No. 376/2014 provides the framework to ensure this happens, requiring mandatory reporting of safety occurrences: events that endanger, or could endanger, an aircraft, its occupants, or others. The regulation introduces structured reporting with mandatory fields and the application of tools like the European Risk Classification Scheme (ERCS) to standardize and prioritize safety data.

But here's the challenge: while the system ensures robust data collection, its full potential often remains untapped. A truly effective reporting system doesn't stop at tracking accidents or relevant safety occurrences; it captures a wide range of data, from safety incidents and near-misses to examples of positive reporting, where operations went exceptionally well. These reports contain valuable insights that can highlight major hazards and identify best practices. Consider the 'narratives' field as an example.

Narratives are more than just words on a page; they’re rich with context, human intuition, and granular details that structured data often misses. A detailed incident report written by a pilot or ground crew member can uncover the nuanced circumstances leading up to an event, reveal subtle hazards, or highlight effective mitigation strategies.

However, to unlock this goldmine of information, the data must be actionable. And this is where things get tricky: a significant portion of these reports comes as manually written narratives—which are free-text accounts that are rich in detail but lack standardization, making them difficult to analyze efficiently. Indeed, manually analyzing thousands of such reports is labor-intensive and prone to human error. This is where AI steps in.

The role of LLMs in unlocking safety data

Safety reporting processes are inherently human: they are built on judgment, experience, and written narratives. However, this reliance on manual inputs brings complexity, demands significant resources, and is prone to human error. That's where Large Language Models (LLMs), such as OpenAI's GPT or Anthropic's Claude, come into play. These advanced AI tools have the potential to transform how we process and utilize safety data, surfacing insights hidden within narrative reports faster and more consistently than manual review allows. By enhancing our ability to analyze free-text data, LLMs offer a powerful opportunity to take safety reporting to the next level.

LLMs are advanced AI tools trained on massive amounts of text data, allowing them to understand, analyze, and generate natural language with remarkable accuracy. While generic LLMs like GPT or Claude are incredibly powerful, applying them directly to aviation safety reporting can present challenges. Why? Because aviation relies on highly specialized vocabulary, technical terms, and unique operational contexts that generic models might not fully grasp.

One solution might be to train an LLM from scratch using aviation-specific data. While this could give the model a deep grasp of the field, the reality is far from simple. Building such a model requires enormous computational resources, time, and money, making it impractical for most organizations. Recent advances in LLM technology offer more practical alternatives: fine-tuning pre-trained models and Retrieval-Augmented Generation (RAG).

- Model fine-tuning involves retraining an existing generic LLM on specialized aviation-related documents. In this way, the model incorporates aviation-specific language into its knowledge, significantly improving its ability to analyze and generate text accurately within this domain.

- In RAG systems, on the other hand, the model retrieves relevant information from specialized aviation databases and integrates it into its responses, generating more contextually accurate and informed outputs without the need for additional model training.

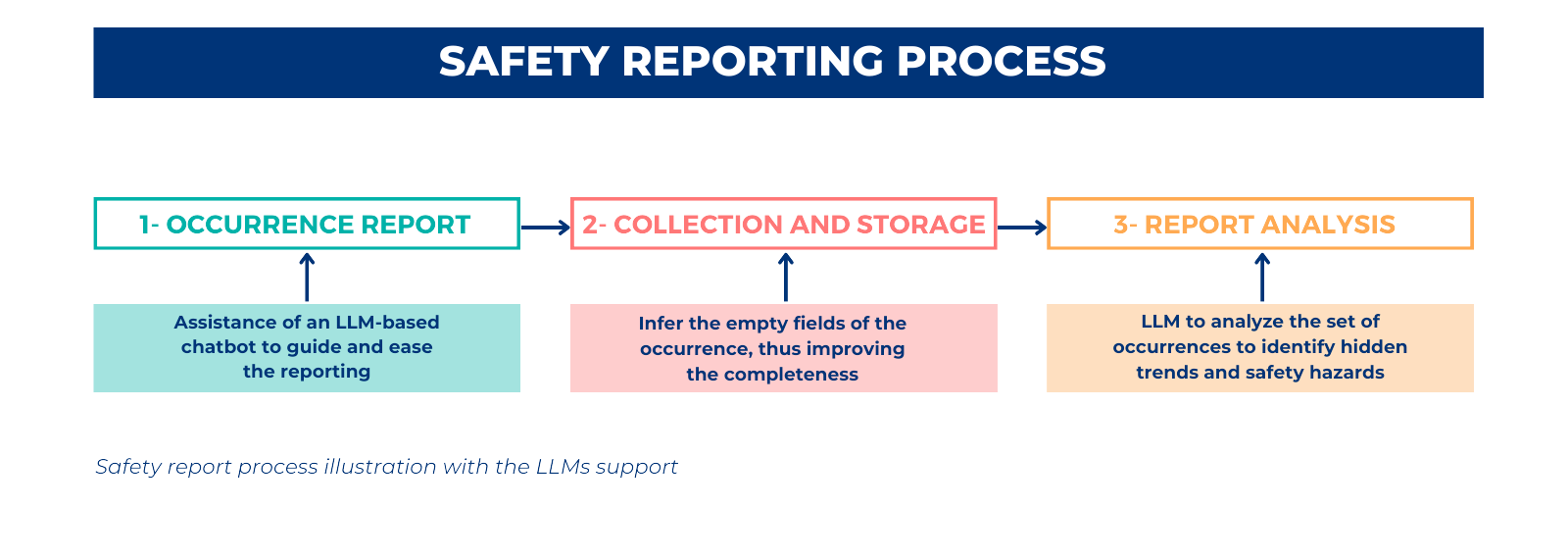

The best part? These two approaches can work together. Fine-tuning ensures the model understands aviation language, while RAG supplements it with up-to-date, specialized information, making the outputs even more accurate and actionable. Together, these advancements can make LLMs a powerful and efficient tool across all stages of the lifecycle of a safety incident report: occurrence reporting, report collection and storage, and aggregated report analysis.
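To make the retrieval step of RAG more concrete, here is a minimal sketch in Python. It assumes a tiny in-memory knowledge base and uses plain word-count similarity as a stand-in for the embedding model and vector database a real system would likely use. The function names and the knowledge base snippets are invented for illustration, and the call to the LLM itself is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base snippets, invented for illustration only.
KNOWLEDGE_BASE = [
    "A runway incursion is the incorrect presence of an aircraft, vehicle or person on a runway.",
    "A bird strike is a collision between a bird and an aircraft.",
    "A go-around is an aborted landing of an aircraft on final approach.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Prepend the retrieved context to the question before it is sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("vehicle entered the runway without clearance", KNOWLEDGE_BASE, k=1))
```

The point of the design is that the model's knowledge can be updated by editing the knowledge base, with no retraining involved.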

1. Occurrence reporting

The first step of the safety reporting lifecycle consists of reporting the safety event itself. In current aviation safety frameworks, an occurrence report has numerous tabular, numeric, and textual fields to be filled out by the reporter, so reporting can become a burdensome task. Moreover, some fields are required only for certain types of incident, making the process complex and nuanced.

LLMs could be leveraged at this stage to ease the process through a chatbot that guides the reporting entity or individual (e.g. the pilot) through the report by asking the questions relevant to that specific incident. This would ensure all relevant fields are completed efficiently, which could in turn lead to more occurrences being reported. Several technologies play a role in developing such an LLM-based chatbot, among them Automatic Speech Recognition (ASR) and Retrieval-Augmented Generation (RAG). ASR is voice recognition software that processes human speech and turns it into text; it would simplify the interface with the system by letting the reporter speak instead of type, greatly accelerating the process. RAG systems, in turn, could be employed to suggest field values based on similar incidents or to provide relevant contextual information based on the nature of the incident.
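The guided-reporting idea can be sketched independently of any particular LLM. The snippet below assumes a simple mapping from occurrence type to required fields and works out which question the chatbot should ask next. All field names and occurrence types are hypothetical and not taken from any official reporting taxonomy; in a real assistant, an LLM would phrase the question and parse the reporter's answer.

```python
# Hypothetical field names and occurrence types, invented for illustration;
# they are not taken from any official reporting taxonomy.
COMMON_FIELDS = ["date", "location", "narrative"]
TYPE_SPECIFIC_FIELDS = {
    "bird_strike": ["flight_phase", "aircraft_damage"],
    "runway_incursion": ["runway_id", "atc_clearance_given"],
}

def required_fields(occurrence_type):
    """Fields required for this occurrence type: common ones plus type-specific ones."""
    return COMMON_FIELDS + TYPE_SPECIFIC_FIELDS.get(occurrence_type, [])

def missing_fields(report):
    """Required fields that are absent or empty in the report so far."""
    needed = required_fields(report.get("occurrence_type"))
    return [f for f in needed if report.get(f) in (None, "")]

def next_question(report):
    """Return the next prompt for the reporter, or None when the report is complete."""
    missing = missing_fields(report)
    if not missing:
        return None
    return f"Please provide: {missing[0].replace('_', ' ')}"

report = {
    "occurrence_type": "bird_strike",
    "date": "2024-05-01",
    "location": "",
    "narrative": "Bird hit the left engine shortly after rotation.",
}
print(missing_fields(report))
print(next_question(report))
```

Keeping the required-field logic in plain data rather than inside the model means the questions asked stay predictable and auditable, whatever model sits in front of them.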

2. Report collection and storage

Once the occurrence has been reported, the second phase in the reporting lifecycle takes place: processing the occurrences and storing them in a repository. This phase can benefit from LLMs by using them to fill in fields that were left empty. An LLM capable of interpreting the textual fields of the occurrence, together with other tabular or numeric mandatory fields, could infer relevant fields that were left empty in the previous reporting phase or identify potential errors in the report. Such assistance would increase the completeness and quality of the reported occurrences, leading to more actionable data and more insightful analysis, and enabling the identification of hidden trends or safety hazards.
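One way to keep such automatic completion traceable is to treat the model's output as suggestions that are validated before being stored. The sketch below assumes the LLM has already returned a dictionary of proposed values; the `suggestions` input, the field names, and the controlled vocabulary are all hypothetical. It fills only empty fields, rejects values outside the allowed vocabulary, and records which fields were inferred rather than reported.

```python
# Hypothetical controlled vocabulary, invented for illustration.
ALLOWED_VALUES = {
    "flight_phase": {"taxi", "takeoff", "cruise", "approach", "landing"},
}

def apply_suggestions(report, suggestions):
    """Fill empty fields with validated suggestions and track what was inferred."""
    completed = dict(report)
    inferred = []
    for field, value in suggestions.items():
        if completed.get(field) not in (None, ""):
            continue  # never overwrite what the reporter entered
        allowed = ALLOWED_VALUES.get(field)
        if allowed is not None and value not in allowed:
            continue  # reject values outside the controlled vocabulary
        completed[field] = value
        inferred.append(field)
    completed["inferred_fields"] = sorted(inferred)
    return completed

report = {"narrative": "Unstable on final, went around.", "flight_phase": "", "location": "LEMD"}
# Stand-in for values proposed by an LLM after reading the narrative.
suggestions = {"flight_phase": "approach", "location": "LEBL"}
print(apply_suggestions(report, suggestions))
```

Recording the inferred fields separately lets an analyst later distinguish what the reporter stated from what the model proposed.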

In addition to increasing the completeness of the occurrences, LLMs could also improve storage and collection efficiency by identifying incident reports that refer to the same occurrence. Such repetition arises when multiple entities are involved in an occurrence and each of them reports the same incident. LLMs could identify those cases by encoding occurrence fields and spotting duplicates or relevant similarities.
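As a rough illustration of duplicate detection, the sketch below flags pairs of reports that share a date and have highly overlapping narratives. It uses Jaccard similarity over word sets as a simple stand-in for the LLM-based encoding described above. The report structure, the identifiers, and the 0.5 threshold are assumptions chosen for the example.

```python
import re
from itertools import combinations

def tokens(text):
    """Set of lowercase alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Jaccard similarity: shared tokens divided by all distinct tokens."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def candidate_duplicates(reports, threshold=0.5):
    """Pairs of report ids with the same date and similar narratives."""
    pairs = []
    for r1, r2 in combinations(reports, 2):
        if r1["date"] != r2["date"]:
            continue  # different days: treat as different occurrences
        if jaccard(r1["narrative"], r2["narrative"]) >= threshold:
            pairs.append((r1["id"], r2["id"]))
    return pairs

# Hypothetical reports, invented for illustration.
reports = [
    {"id": "A1", "date": "2024-05-01", "narrative": "Bird strike on left engine during takeoff from runway 18"},
    {"id": "B7", "date": "2024-05-01", "narrative": "Bird strike on the left engine during takeoff runway 18"},
    {"id": "C3", "date": "2024-05-01", "narrative": "Passenger medical emergency during cruise"},
]
print(candidate_duplicates(reports))  # [('A1', 'B7')]
```

Flagged pairs are only candidates; a human reviewer, or a second and more careful model pass, would decide whether to merge them.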

3. Report analysis

In the analysis phase of the safety reporting process, LLMs can play a transformative role by identifying trends, anomalies, and underlying safety hazards within vast amounts of occurrence data. Trained on extensive text data, LLMs can analyze reports and unstructured narratives to surface patterns that may not be immediately visible to human analysts. Such analysis would rely on techniques such as text embedding, which converts texts into vector representations usable by machine learning models, and clustering, which groups related occurrences so that patterns can be identified. The findings can then be mapped to potential mitigation strategies such as regulatory actions, protocols, or operational restrictions. Targeted training programs may likewise be designed around the safety-critical insights identified by the LLM. For example, the LLM might identify a specific operational scenario that leads to a significant number of incidents, making the risk management entity aware of the pattern and allowing it to steer a training program to prevent that type of incident.
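The embedding-plus-clustering idea can be sketched with a toy example. The code below assumes word counts as the "embedding" and a simple leader clustering pass: each narrative joins the first cluster whose first member is similar enough, otherwise it starts a new one. A real pipeline would more likely use a learned text-embedding model and an established algorithm such as k-means; the narratives and the 0.4 threshold are invented for illustration.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: token counts. A real system would use a text-embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def leader_clustering(texts, threshold=0.4):
    """Assign each text to the first cluster whose leader is similar enough."""
    leaders = []   # embedding of the first member of each cluster
    clusters = []  # each cluster is a list of indices into texts
    for i, text in enumerate(texts):
        vec = embed(text)
        for c, leader in enumerate(leaders):
            if cosine(vec, leader) >= threshold:
                clusters[c].append(i)
                break
        else:
            # No existing cluster is close enough: start a new one.
            leaders.append(vec)
            clusters.append([i])
    return clusters

# Hypothetical narratives, invented for illustration.
narratives = [
    "bird strike during takeoff",
    "bird strike on approach",
    "unstable approach followed by go around",
    "bird strike during initial climb",
]
print(leader_clustering(narratives))  # [[0, 1, 3], [2]]
```

Cluster sizes then give a first view of recurring scenarios: here the bird strike group stands out as the most frequent one.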

Challenges and conclusions

Introducing LLMs into aviation safety reporting isn’t without its challenges. One key concern is the accuracy of their outputs, which heavily depends on the quality and completeness of the input data. Incomplete or poorly structured data can lead to inaccurate or misleading results, creating potential risks instead of mitigating them. Additionally, LLMs often function as "black boxes", meaning their decision-making process is not always transparent—a significant drawback in safety workflows where accountability and traceability are non-negotiable.

Yet, these challenges shouldn’t overshadow the immense potential LLMs bring to the table. By improving accuracy, automating repetitive tasks, and uncovering valuable insights hidden in narrative reports, LLMs could redefine how we manage safety data. Tools like fine-tuning and RAG are already bridging the gap, enabling models to become more context-aware and reliable while addressing some of the transparency concerns.

The road ahead is about balancing innovation with responsibility. As these technologies continue to evolve, so too will their ability to integrate seamlessly into aviation’s safety ecosystem. With proper oversight, collaboration, and iterative improvements, LLMs can become powerful allies in enhancing safety management—helping the aviation industry predict, prevent, and respond to risks with greater efficiency than ever before. The future of aviation safety reporting is both exciting and transformative, and the adoption of LLMs marks an important step toward a smarter, safer, and more proactive approach to safeguarding the skies.